Advanced Usage¶

File aliasing¶

The tesseract command can take care of

passing data from local disk

(or any fsspec-compatible resource,

like HTTP, FTP, S3 Buckets, and so on) to a Tesseract via the @ syntax.

You can mount a folder into a Tesseract with --input-path. A The input path is mounted with read-only permissions so a Tesseract will never mutate files located at the input path.

Paths in a Tesseract’s payload have to be relative to --input-path:

tesseract run filereference apply \

--input-path ./testdata \

--output-path ./output \

'{"inputs": {"data": ["sample_2.json", "sample_3.json"]}}'

If you want to write the output of a Tesseract to a file,

you can use the --output-path parameter, which also supports any

fsspec-compatible

target path:

$ tesseract run vectoradd apply --output-path /tmp/output @inputs.json

Logging metrics and artifacts¶

Tesseracts may log metrics and artifacts (e.g. iteration numbers, VTK files, …) as demonstrated in the metrics example Tesseract.

# Copyright 2025 Pasteur Labs. All Rights Reserved.

# SPDX-License-Identifier: Apache-2.0

from pydantic import BaseModel

from tesseract_core.runtime.experimental import log_artifact, log_metric, log_parameter

class InputSchema(BaseModel):

pass

class OutputSchema(BaseModel):

pass

def apply(inputs: InputSchema) -> OutputSchema:

"""This demonstrates logging parameters, metrics and artifacts."""

print("This is a message from the apply function.")

log_parameter("example_param", "value")

for step in range(10):

metric_value = step**2

log_metric("squared_step", metric_value, step=step)

text = "This is an output file we want to log as an artifact."

with open("/tmp/artifact.txt", "w") as f:

f.write(text)

log_artifact("/tmp/artifact.txt")

return OutputSchema()

By default, Tesseracts log metrics, parameters and artifacts to a directory logs in the Tesseract’s --output-path. (Note that, when running Tesseracts in a container, the log directory is placed inside the container.)

Alternatively, you can log metrics and artifacts to an MLflow server by setting the TESSERACT_MLFLOW_TRACKING_URI environment variable. For local development, you can spin up an MLflow server (ready to use with Tesseract) through the provided docker-compose file:

docker-compose -f extra/mlflow/docker-compose-mlflow.yml up

Launch the metrics example Tesseract with the the following volume mount, network and TESSERACT_MLFLOW_TRACKING_URI to ensure that it connects to that MLflow server.

tesseract serve --network=tesseract-mlflow-server --env=TESSERACT_MLFLOW_TRACKING_URI=http://mlflow-server:5000 --volume mlflow-data:/mlflow-data:rw metrics

The same options apply when executing Tesseracts through tesseract run.

As an alternative to the MLflow setup we provide, you can point your Tesseract to a custom MLflow server:

$ tesseract serve --env=TESSERACT_MLFLOW_TRACKING_URI="..." metrics

Note that if your MLFlow server uses basic auth, you need to set the MLFLOW_TRACKING_USERNAME and

MLFLOW_TRACKING_PASSWORD env variables for the Tesseract to be able to authenticate to it.

$ tesseract serve --env=TESSERACT_MLFLOW_TRACKING_URI="..." \

--env=MLFLOW_TRACKING_USERNAME="..." --env=MLFLOW_TRACKING_PASSWORD="..." \

metrics

If you wish to pass additional parameters to the MLflow run (such as tags, run name, or description), you can do so via the TESSERACT_MLFLOW_RUN_EXTRA_ARGS environment variable. This accepts a Python dictionary string that is passed directly to mlflow.start_run(). See supported

parameters in the mlflow documentation.

Example: Setting tags only

$ tesseract serve --env=TESSERACT_MLFLOW_TRACKING_URI="..." \

--env=TESSERACT_MLFLOW_RUN_EXTRA_ARGS='{"tags": {"key1": "value1", "key2": "value2"}}' \

metrics

Example: Setting run name and tags

$ tesseract serve --env=TESSERACT_MLFLOW_TRACKING_URI="..." \

--env=TESSERACT_MLFLOW_RUN_EXTRA_ARGS='{"run_name": "my_experiment", "tags": {"env": "production"}}' \

metrics

Example: Multiple parameters

$ tesseract serve --env=TESSERACT_MLFLOW_TRACKING_URI="..." \

--env=TESSERACT_MLFLOW_RUN_EXTRA_ARGS='{"run_name": "test_run", "description": "Testing new feature", "tags": {"version": "1.0"}}' \

metrics

Volume mounts and user permissions¶

When mounting a volume into a Tesseract container, default behavior depends on the Docker engine being used. Specifically, Docker Desktop, Docker Engine, and Podman have different ways of handling user permissions for mounted volumes.

Tesseract tries to ensure that the container user has the same permissions as the host user running the tesseract command. This is done by setting the user ID and group ID of the container user to match those of the host user.

In cases where this fails or is not desired, you can explicitly set the user ID and group ID of the container user using the --user argument. This allows you to specify a different user or group for the container, which can be useful for ensuring proper permissions when accessing mounted volumes.

Warning

In cases where the Tesseract user is neither root nor the local user / file owner, you may encounter permission issues when accessing files in mounted volumes. To resolve this, ensure that the user ID and group ID are set correctly using the --user argument, or modify the permissions of files to be readable by any user.

Passing environment variables to Tesseract containers¶

Through the optional --env argument, you can pass environment variables to Tesseracts.

This works both for serving a Tesseract and running a single execution:

$ tesseract serve --env=MY_ENV_VARIABLE="some value" helloworld

$ tesseract run --env=MY_ENV_VARIABLE="some value" helloworld apply '{"inputs": {"name": "Osborne"}}'

Using GPUs¶

To leverage GPU support in your Tesseract environment, you can specify which NVIDIA GPU(s) to make available

using the --gpus argument when running a Tesseract command. This allows you to select specific GPUs or

enable all available GPUs for a task.

To run Tesseract on a specific GPU, provide its index:

$ tesseract run --gpus 0 helloworld apply '{"inputs": {"name": "Osborne"}}'

To make all available GPUs accessible, use the --gpus all option:

$ tesseract run --gpus all helloworld apply '{"inputs": {"name": "Osborne"}}'

You can also specify multiple GPUs individually:

$ tesseract run --gpus 0 --gpus 1 helloworld apply '{"inputs": {"name": "Osborne"}}'

The GPUs are indexed starting at zero with the same convention as nvidia-smi.

Deploying and interacting with Tesseracts on HPC clusters¶

Running Tesseracts on high-performance computing clusters can have many use cases including:

Deployment of a single long-running component of a pipeline on a state-of-the-art GPU.

Running an entire optimization workflow on a dedicated compute node

Large parameter scans distributed in parallel over a multitude of cores.

All of this is possible even in scenarios where containerisation options are either unavailable or incompatible by directly using tesseract-runtime (which includes a serve feature). For more details, please see our tutorial, which demonstrates how to launch uncontainerised Tesseracts using SLURM, either as a batch job or for interactive use.

Debug mode¶

tesseract serve supports a --debug flag; this has two effects:

Tracebacks from execution are returned in the response body, instead of a generic 500 error. This is useful for debugging and testing, but unsafe for production environments.

Aside from listening to the usual Tesseract requests, a debugpy server is also started in the container, and the port it’s listening to is forwarded to some free port on the host which is displayed in the cli when spinning up a tesseract via

tesseract serve. This allows you to perform remote debugging sessions.

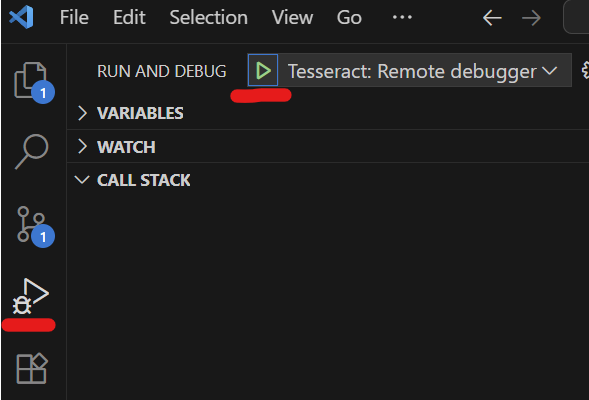

In particular, if you are using VScode, here is a sample launch config to attach to a running Tesseract in debug mode:

{

"name": "Tesseract: Remote debugger",

"type": "debugpy",

"request": "attach",

"connect": {

"host": "localhost",

"port": "PORT_NUMBER_HERE"

},

"pathMappings": [

{

"localRoot": "${workspaceFolder}/examples/helloworld",

"remoteRoot": "/tesseract"

}

],

},

(make sure to fill in with the actual port number). After inserting this into the configurations

field of your launch.json file, you should be able to attach to the Tesseract being served by clicking on the

green “play” button at the top left corner of the “Run and Debug” tab.

For more information on the VSCode debugger, see this guide.